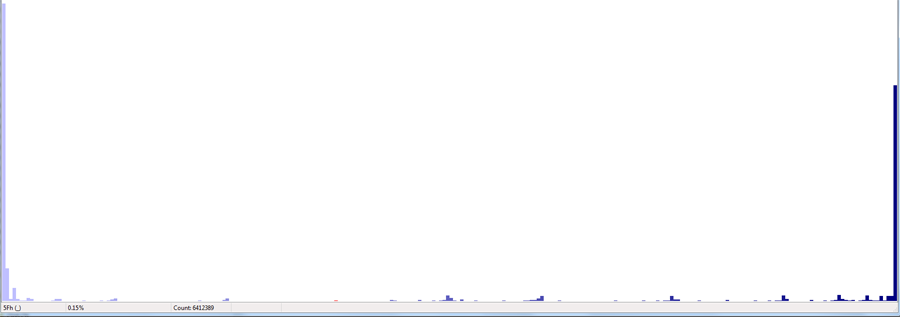

Non-uniform byte distribution in an uncompressed 4GB EGTB file:

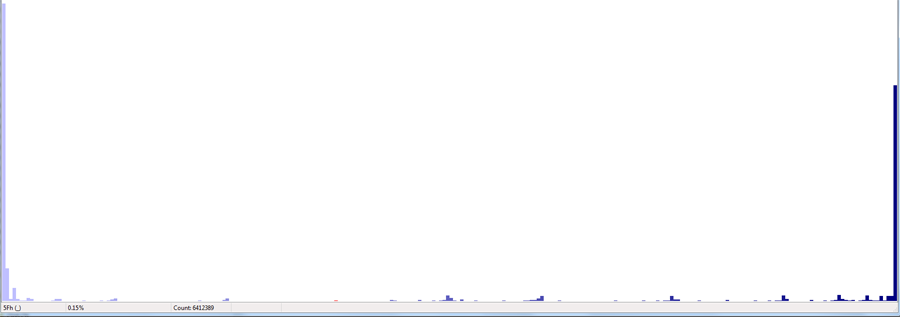

Uniform byte distribution after compression of this file at ~ 20 to 1:

Regards,

Forrest
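The flat and skewed histograms in the plots can be put into a single number with the empirical Shannon entropy of the byte values. A perfectly flat histogram gives 8 bits per byte, and heavily skewed data gives much less. The sketch below uses hypothetical helper names (`byte_counts` and `entropy_bits_per_byte` are illustrative, not from any tool used in this thread). It reads the file in chunks so that a 4 GB table does not have to fit in memory.

```python
import math
from collections import Counter


def byte_counts(path: str, chunk_size: int = 1 << 20) -> list[int]:
    """Histogram of the 256 byte values in a file, read chunk by chunk."""
    counts = [0] * 256
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            # Counter over a bytes object yields integer byte values 0..255.
            for value, n in Counter(chunk).items():
                counts[value] += n
    return counts


def entropy_bits_per_byte(counts: list[int]) -> float:
    """Empirical Shannon entropy: 8.0 means a perfectly flat histogram."""
    total = sum(counts)
    if total == 0:
        return 0.0
    return -sum(n / total * math.log2(n / total) for n in counts if n)
```

For example, `entropy_bits_per_byte(byte_counts("table.bin"))` should come out close to 8.0 for well-compressed output. The file name is only a placeholder.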


> Zenmastur wrote:
> > syzygy wrote:
> > > Zenmastur wrote: I don't know what compression ratio you're getting, but it looks like it's possible to compress the files another 25-40%.
> >
> > I'm sure you haven't tested on the bigger files (the ones that matter).
>
> I didn't actually test it on any of your files since I don't have uncompressed versions of them, but the statistics I was looking at came from KRBvsKBP.RTBZ, which is one of the largest files.

Duh... Have fun.

Since I limit the compression to 65536 values per 64-byte block, the most highly compressible tables can probably be compressed further. But the bigger tables are 98% or so random data as far as any general-purpose compressor is concerned (only the index tables can be compressed a bit). Those are the tables that matter. Try it.
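Why a general-purpose compressor gains little on data that is already close to random can be illustrated by running zlib on uniformly random bytes and on a heavily skewed byte stream. This is a hedged illustration: Python's standard `zlib` module stands in for "any general purpose compressor", and both inputs are synthetic, not EGTB data.

```python
import random
import zlib


def zlib_ratio(data: bytes, level: int = 9) -> float:
    """Original size divided by zlib-compressed size (higher = more compressible)."""
    return len(data) / len(zlib.compress(data, level))


rng = random.Random(0)
# Stand-in for an already well-compressed table: uniformly random bytes.
random_like = rng.randbytes(1 << 16)
# Stand-in for a skewed table: mostly zero bytes with occasional 1s and 2s.
skewed = bytes(rng.choice(b"\x00\x00\x00\x00\x00\x00\x00\x01\x02") for _ in range(1 << 16))

print(f"random-like: {zlib_ratio(random_like):.3f}")  # slightly below 1: no gain at all
print(f"skewed:      {zlib_ratio(skewed):.3f}")
```

The random-like input actually grows by a few bytes of framing overhead, which is the behaviour to expect from any compressor fed data it cannot model.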

> Zenmastur wrote: Assuming a modest 12 to 1 compression ratio (I actually expect closer to 16 to 1), a machine with 128 GB of RAM could hold the entire contents of a 1.44 TB EGTB and still have 8 GB left over for the OS and generation program.

It makes no sense whatsoever to hold a pawny EGT in RAM. It would just occupy RAM with data that is not used, and would never be used. 8 GB of RAM should be more than enough to hold all the data you need (uncompressed) for building any pawny 7-men without any speed loss.
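The figures in the quoted estimate can be checked directly. A minimal sketch, assuming decimal units throughout (1.44 TB = 1440 GB); `ram_left_gb` is a hypothetical helper name.

```python
def ram_left_gb(ram_gb: int, egtb_gb: int, ratio: int) -> float:
    """RAM left over after holding an EGTB compressed at ratio:1 (decimal GB)."""
    return ram_gb - egtb_gb / ratio


print(ram_left_gb(128, 1440, 12))  # 8.0  (1440 / 12 = 120 GB of compressed data)
print(ram_left_gb(128, 1440, 16))  # 38.0 (1440 / 16 = 90 GB of compressed data)
```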

> hgm wrote: It makes no sense whatsoever to hold a pawny EGT in RAM. It would just be occupying RAM with data that is not used, and would never be used. 8GB RAM should be more than enough to hold all the data you need (uncompressed) for building any pawny 7-men without any speed loss.

These aren't general-purpose tablebases, i.e. not all of the possible positions are considered, just those required to solve the specific board position under consideration. They're not designed to be used as part of a chess-playing program per se. Most of these positions have more than 7 men in them. E.g. I have done one position with 14 pieces (mostly locked pawns), although most have 8-12 men.

> Zenmastur wrote: So what you are saying is that I should be able to fit everything needed to solve these positions into a reasonable amount of RAM without using compression, is that correct?

Sure. They are basically just non-symmetric 4-men EGT, requiring only 256KB each if you store 1 byte per position (a 7-bit DTM for black to move plus a won/non-won bit for white to move).
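The 1-byte-per-position layout can be sketched as follows: the low 7 bits hold the DTM of the black-to-move position and the top bit says whether the white-to-move position is won. The bit order, the helper names, and the base-64 indexing of freely placed men are assumptions for illustration. Since 256 KB is exactly 64^3 bytes, the slice size is left as a parameter rather than assuming how the men in a slice are counted.

```python
def pack_entry(btm_dtm: int, wtm_won: bool) -> int:
    """Pack a 7-bit btm DTM and a 1-bit wtm won flag into one byte."""
    if not 0 <= btm_dtm <= 0x7F:
        raise ValueError("btm DTM must fit in 7 bits")
    return btm_dtm | (0x80 if wtm_won else 0)


def unpack_entry(entry: int) -> tuple[int, bool]:
    """Inverse of pack_entry: (btm DTM, wtm won?)."""
    return entry & 0x7F, bool(entry & 0x80)


def slice_index(squares: list[int]) -> int:
    """Hypothetical layout: squares (0-63) of the free men read as base-64 digits."""
    index = 0
    for sq in squares:
        index = index * 64 + sq
    return index


def slice_bytes(free_men: int) -> int:
    """One byte per placement of the free men, with no symmetry reduction."""
    return 64 ** free_men
```

With three free men, `bytearray(slice_bytes(3))` is a 262144-byte (256 KB) table that can be indexed by `slice_index`.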

> hgm wrote: Sure. They are basically just non-symmetric 4-men EGT, requiring only 256KB each if you store 1 byte per position (btm 7-bit DTM and wtm won/non-won bit).

I'm not sure I understand your explanation, but I am sure I don't see how you think this method will speed up the generation process. If I can store the whole database in memory, I only have to write to disk once.

You would need to hold the P-slice you are working on, and the successors to seed and probe it. And the number of successors is typically the number of Pawns. (But those on the 2nd rank count double, as well as those able to do PxP captures, and there could be twice as many really tiny capture successors.)
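Counting the successor P-slices of a given Pawn structure can be sketched as follows. This is a simplified, hypothetical helper. It counts single pushes (including pushes to promotion), double pushes from the 2nd rank, and PxP captures, and it ignores en passant and Pawn captures of pieces (the "tiny capture successors"). Pieces other than Pawns are ignored because they vary within a P-slice rather than defining it.

```python
Square = tuple[int, int]  # (file 0-7, rank 0-7); rank 0 is White's 1st rank


def successor_slices(white: set[Square], black: set[Square]) -> int:
    """Count Pawn moves that lead to a different P-slice."""
    occupied = white | black
    count = 0
    # (own pawns, enemy pawns, direction of travel, 0-based 2nd-rank index)
    for pawns, enemies, step, start_rank in ((white, black, 1, 1), (black, white, -1, 6)):
        for f, r in pawns:
            if (f, r + step) not in occupied:
                count += 1  # single push (or push to promotion)
                if r == start_rank and (f, r + 2 * step) not in occupied:
                    count += 1  # double push: 2nd-rank Pawns count double
            for df in (-1, 1):
                if (f + df, r + step) in enemies:
                    count += 1  # PxP capture
    return count
```

A lone white Pawn on e2 gives 2 successors, while a locked pair on e4/e5 gives none, which matches the rule of thumb that blocked Pawns do not add slices.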

Of course you would have to write the results to disk anyway, unless you are building only to obtain statistics. But loading back the P-slices you need for your next build wouldn't really slow you down, as it is sequential access to 256KB chunks of disk space, which can be stored contiguously. So 2MB of RAM would be enough for those positions. You can build them entirely in the L2 cache of a Core 2 Duo!
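Storing fixed-size P-slices back to back makes reloading any one of them a single seek plus one sequential read. A minimal sketch, assuming 256 KB slices addressed by a hypothetical slice number. The 2 MB figure is read here as the slice being built plus about seven successors; that is an interpretation, not something the post states.

```python
SLICE_BYTES = 256 * 1024  # per-slice size quoted in the thread


def write_slices(path: str, slices: list[bytes]) -> None:
    """Store fixed-size P-slices back to back so each is one contiguous run on disk."""
    with open(path, "wb") as f:
        for s in slices:
            if len(s) != SLICE_BYTES:
                raise ValueError("every slice must be SLICE_BYTES long")
            f.write(s)


def read_slice(path: str, index: int) -> bytes:
    """Load one P-slice with a single seek plus one sequential read."""
    with open(path, "rb") as f:
        f.seek(index * SLICE_BYTES)
        data = f.read(SLICE_BYTES)
    if len(data) != SLICE_BYTES:
        raise EOFError(f"slice {index} not present in {path}")
    return data


def working_set_bytes(num_successors: int) -> int:
    """Current slice plus its successor slices, all held in RAM at once."""
    return (1 + num_successors) * SLICE_BYTES
```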

With a little bit more RAM you could already load the successor P-slices you need for your next build into RAM while you are working on the current build, as asynchronous I/O. (Insofar as they don't overlap with the successors of the current build.)
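The asynchronous prefetch can be sketched with a thread pool from Python's standard library. Reads for the next build's successor slices are submitted in the background, skipping any that are already in memory for the current build. `SlicePrefetcher` and the file layout (fixed 256 KB slices stored contiguously) are hypothetical.

```python
from concurrent.futures import Future, ThreadPoolExecutor

SLICE_BYTES = 256 * 1024  # per-slice size quoted in the thread


def read_slice(path: str, index: int) -> bytes:
    """Synchronous read of one contiguous slice."""
    with open(path, "rb") as f:
        f.seek(index * SLICE_BYTES)
        return f.read(SLICE_BYTES)


class SlicePrefetcher:
    """Background loader for the next build's successor slices (hypothetical helper)."""

    def __init__(self, path: str, workers: int = 2):
        self.path = path
        self.pool = ThreadPoolExecutor(max_workers=workers)
        self.pending: dict[int, Future] = {}

    def prefetch(self, next_needed: set[int], in_memory: set[int]) -> None:
        # Only fetch slices the current build does not already hold.
        for idx in sorted(next_needed - in_memory - set(self.pending)):
            self.pending[idx] = self.pool.submit(read_slice, self.path, idx)

    def get(self, idx: int) -> bytes:
        # Blocks only if the background read has not finished yet;
        # falls back to a synchronous read for slices never prefetched.
        fut = self.pending.pop(idx, None)
        return fut.result() if fut is not None else read_slice(self.path, idx)

    def close(self) -> None:
        self.pool.shutdown(wait=True)
```

Threads are enough here because CPython releases the GIL during file reads, so the I/O overlaps with the build's computation.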