With this type of testing, it depends on the number of occurrences the variable can change. Material values occur on every node, so 1000 positions can give surprisingly useful results. On the other hand, if one were testing the number of occurrences the rook attacks the king on a half-open file, there may be no more than 20 occurrences in 100,000 positions. That would be insufficient to verify a useful result. I use a "found occurrence" counter to verify a minimum or the result is rejected.hgm wrote:Well, 1000 positions does not sound like very much. How many of those would have been end-game positions where one of the sides was a minor ahead? And how well were these finally fitted?

Ab-initio evaluation tuning

Moderators: hgm, Rebel, chrisw

-

D Sceviour

- Posts: 570

- Joined: Mon Jul 20, 2015 5:06 pm

Re: Ab-initio evaluation tuning

-

Evert

- Posts: 2929

- Joined: Sat Jan 22, 2011 12:42 am

- Location: NL

Re: Ab-initio evaluation tuning

Indeed. That is true even if you don't use tablebases because this specific knowledge is not encoded in the piece values themselves.AlvaroBegue wrote: I would discard any positions with 6 men or fewer, since we can get those from EGTBs. That should take care of most of the special cases.

Similarly, positions that depend on themes like "wrong bishop" or "opposite bishops" should be discarded. It's actually not so obvious if they have been, without having a program filter through the set. I don't know if that was done, and writing code to handle that takes more time than I have at the moment.

Of course, in the end of the day, a full evaluation function might have such knowledge, and then tuning it on the entire data set is correct, so perhaps this isn't a problem in practice. It's not like you would not adjust the piece values afterwards.

It appears to be similar, yes:I would also be curious if you get the same results using my collection of positions: https://bitbucket.org/alonamaloh/ruy_tu ... th_results

These were collected from calls to the evaluation function in RuyDos. I labelled them with a result by playing a very quick game of Stockfish against itself. I then replaced each position with the position that the quiescence search score came from.

Code: Select all

MG EG

P 0.67 1.00

N 3.18 2.33

B 3.30 2.44

R 4.34 4.38

Q 8.56 8.56

BB 0.06 0.23

-

zenpawn

- Posts: 349

- Joined: Sat Aug 06, 2016 8:31 pm

- Location: United States

Re: Ab-initio evaluation tuning

Stockfish apparently does this too, per the comment "Second-degree polynomial material imbalance by Tord Romstad." Could someone please explain how this works and/or point me to something that would? Thanks.Evert wrote:(the material evaluation is a general quadratic function to handle material imbalances)

-

Evert

- Posts: 2929

- Joined: Sat Jan 22, 2011 12:42 am

- Location: NL

Re: Ab-initio evaluation tuning

Ok, I did some quick statistics for the positions included. I counted the number of positions in the sets that have <= 6 men (these are tablebase positions, and may have unusual material combinations that make them unsuitable for general evaluation tuning). I counted 97277 of these in quiescent_positions_with_results (Álvaro Begué's dataset, hereafter AB), and 63076 in quiet-labeled (Alexandru Mosoi's dataset, hereafter AM). These I should probably discount, at least for now.

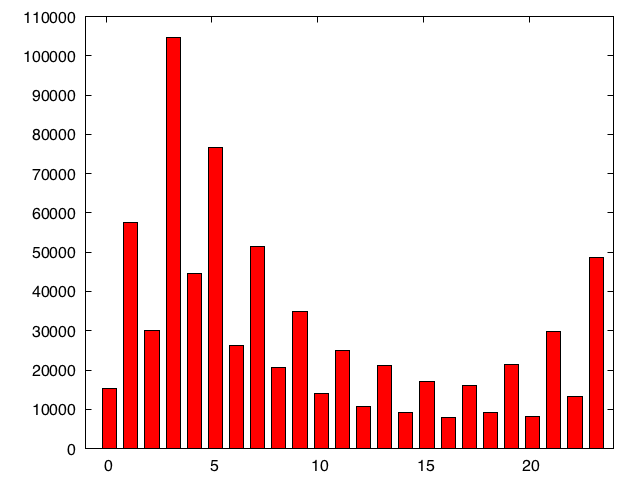

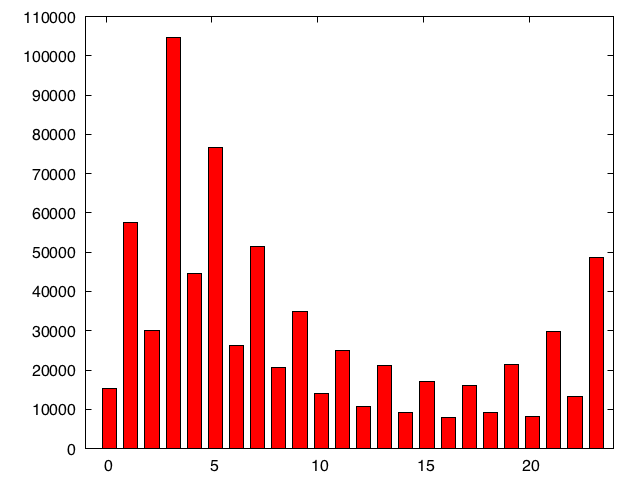

I also made a histogram of the number of positions included against game phase, calculated in the Fruit way: phase = 4*minors+2*rooks+4*queens. So phase=24 means opening material (no pieces exchanged) and phase=0 means pawn ending (all pieces exchanged).

For AB I get:

For AM I get:

Both sets appear to be biased to late-phase positions. The phase is odd if there is a material imbalance of a minor (or three minors versus a queen). These positions are oddly overrepresented, but that may not be an issue: positions where material is equal tell you nothing about material balance anyway, so those should probably be taken out of my current tuning run.

I'll take out positions with too few pieces and without a material imbalance and report back on the results.

I also made a histogram of the number of positions included against game phase, calculated in the Fruit way: phase = 4*minors+2*rooks+4*queens. So phase=24 means opening material (no pieces exchanged) and phase=0 means pawn ending (all pieces exchanged).

For AB I get:

For AM I get:

Both sets appear to be biased to late-phase positions. The phase is odd if there is a material imbalance of a minor (or three minors versus a queen). These positions are oddly overrepresented, but that may not be an issue: positions where material is equal tell you nothing about material balance anyway, so those should probably be taken out of my current tuning run.

I'll take out positions with too few pieces and without a material imbalance and report back on the results.

-

Evert

- Posts: 2929

- Joined: Sat Jan 22, 2011 12:42 am

- Location: NL

Re: Ab-initio evaluation tuning

I think Tord's inspiration came from the bishop pair bonus and the various imbalance/scaling rules in the paper by Larry Kaufman (knight and rook "redundancy" and scaling with remaining pawns).zenpawn wrote: Stockfish apparently does this too, per the comment "Second-degree polynomial material imbalance by Tord Romstad." Could someone please explain how this works and/or point me to something that would? Thanks.

My own thinking is the following. The material evaluation M is an unknown function of the number (and type) of white pieces W and the number (and type) of black pieces B, so we have M(W, B). We don't know this function, but we do know some of its properties. In particular, M(W, B) = -M(B, W). You can then try to write a general series expansion (think of it as a Taylor series where you don't know the derivatives) and determine the coefficients by fitting to data. To lowest order:

Code: Select all

M(W, B) = Mp*Wp + Mn*Wn + Mb*Wb + Mr*Wr + Mq*Wq - (W<=>B)

= Mp*(Wp-Bp) + Mn*(Wn-Bn) + Mb*(Wb-Bb) + Mr*(Wr-Br) + Mq*(Wq-Bq)

You can carry the expansion to quadratic order. This gives you three types of terms.

The first is the straight square of each piece type, for instance Mbb*Wb*Wb. This is (effectively) the bishop pair bonus, generalised to the other piece types.

The second are symmetric cross-terms for your own pieces. These are synergies; I can't think of any of the top of my head, but this lets you give different values for the imbalance RN vs RB, for instance.

The third are cross-terms with enemy pieces. These let you assign a material score to Q vs RR, for instance. They also give you the lowest order "elephantiasis" effect (major pieces have their value depressed by the presence of opposing minors); you can find a number of threads discussing this in the context of QQQ vs NNNNNNN.

That's it in a nutshell. I hope that wasn't too technical. In principle you can carry the expansion further to cubic terms, which would let you include Q vs BBN and Q vs BNN. I think the only other important terms there are RR vs BBN, RR vs BNN and R vs BN (R vs BB and R vs NN are quadratic terms), but perhaps there are pawn terms that are important too, I don't know. I don't think anyone has tried to do this, but I might throw in some cubic terms once I get the quadratic stuff working as intended.

-

kbhearn

- Posts: 411

- Joined: Thu Dec 30, 2010 4:48 am

Re: Ab-initio evaluation tuning

It's possible the 2nd and 3rd order terms might allow you to not worry about discarding so many positions. i.e. instead of a presence of positions where an extra knight draws because there's no pawns on your side dragging it down for all positions erroneously, some of the knight's endgame value might be translated into the WnWp term - the WnWpWp term might also be of importance to then counter too much value in the WnWp term for high pawn counts.Evert wrote:I think Tord's inspiration came from the bishop pair bonus and the various imbalance/scaling rules in the paper by Larry Kaufman (knight and rook "redundancy" and scaling with remaining pawns).zenpawn wrote: Stockfish apparently does this too, per the comment "Second-degree polynomial material imbalance by Tord Romstad." Could someone please explain how this works and/or point me to something that would? Thanks.

My own thinking is the following. The material evaluation M is an unknown function of the number (and type) of white pieces W and the number (and type) of black pieces B, so we have M(W, B). We don't know this function, but we do know some of its properties. In particular, M(W, B) = -M(B, W). You can then try to write a general series expansion (think of it as a Taylor series where you don't know the derivatives) and determine the coefficients by fitting to data. To lowest order:Here (Wp, Wn, Wb, Wr, Wq) are the number of White pawns, knights, bishops, rooks and queens, and (W<=>B) means "repeat previous terms with White and Black interchanged". The coefficients (Mp, Mn, Mb, Mr, Mq) are just the normal piece values.Code: Select all

M(W, B) = Mp*Wp + Mn*Wn + Mb*Wb + Mr*Wr + Mq*Wq - (W<=>B) = Mp*(Wp-Bp) + Mn*(Wn-Bn) + Mb*(Wb-Bb) + Mr*(Wr-Br) + Mq*(Wq-Bq)

You can carry the expansion to quadratic order. This gives you three types of terms.

The first is the straight square of each piece type, for instance Mbb*Wb*Wb. This is (effectively) the bishop pair bonus, generalised to the other piece types.

The second are symmetric cross-terms for your own pieces. These are synergies; I can't think of any of the top of my head, but this lets you give different values for the imbalance RN vs RB, for instance.

The third are cross-terms with enemy pieces. These let you assign a material score to Q vs RR, for instance. They also give you the lowest order "elephantiasis" effect (major pieces have their value depressed by the presence of opposing minors); you can find a number of threads discussing this in the context of QQQ vs NNNNNNN.

That's it in a nutshell. I hope that wasn't too technical. In principle you can carry the expansion further to cubic terms, which would let you include Q vs BBN and Q vs BNN. I think the only other important terms there are RR vs BBN, RR vs BNN and R vs BN (R vs BB and R vs NN are quadratic terms), but perhaps there are pawn terms that are important too, I don't know. I don't think anyone has tried to do this, but I might throw in some cubic terms once I get the quadratic stuff working as intended.

-

Ferdy

- Posts: 4833

- Joined: Sun Aug 10, 2008 3:15 pm

- Location: Philippines

Re: Ab-initio evaluation tuning

One reason could be in the training set coupled with the available tuned eval features, here is one scenario - winning side has no minor and losing side has minor (or losing side minor > winning side minor), now reducing the minor value would increase the winning side score (the goal). This is aggravated because other eval features of losing side are not available to counter the material score of the winning side.Evert wrote:I've been writing up a new evaluation function from scratch, and one of the things I've been looking into is tuning it from the beginning. Right now it only has material evaluation (the material evaluation is a general quadratic function to handle material imbalances), and I'm trying to tune the piece values. The results confuse me a bit, however.

Some details:

1. By "tune" I mean that I filter the evaluation function through a logistic that maps the evaluation to a game result (0...1, with 0=black wins, 1=white wins, 0.5=draw). The idea is pretty standard. I use f(x) = 1/(1+exp(-k*x)), with x the evaluation in pawn units and k a scaling constant (which happens to be 1 according to a monte-carlo best parameter estimate).

2. I use the test position set by Alexandru Mosoi (http://talkchess.com/forum/viewtopic.php?p=686204).

3. To fit the data, I use stochastic gradient descent with 1000 positions in each estimate for the gradient. I haven't tried anything more fancy, but I did first try the Simplex algorithm from GSL as an alternative. This works ok too.

4. In the evaluation, I have fixed the value of a pawn in the end game (VALUE_P_EG) at 256. This fixes the scale for the evaluation, which is otherwise arbitrary.

5. During tuning, evaluation parameters are treated as double-precision floating point numbers.

6. I started with 11 parameters to tune: piece values for N, B, R, Q in MG and EG, pawn value in MG and bishop pair bonus for MG and EG (this is in fact just the quadratic term in the material evaluation).

The initial parameters areAfter tuning, I getCode: Select all

MG EG P 0.80 1.00 N 3.25 3.50 B 3.25 3.50 R 4.50 5.50 Q 9.00 9.75 BB 0.00 0.00What I find particularly odd is the lower end-game value of the minor pieces. Without playing any games (which is a while away yet), they look wrong to me. With these values, an engine that is a minor ahead would adopt a trade-avoiding strategy (because his minor would get devalued) unless it can get an extra pawn in the bargain. Conversely, an engine that is a minor behind will gladly exchange material.Code: Select all

MG EG P 0.96 1.00 N 3.46 2.39 B 3.37 2.54 R 4.40 4.70 Q 8.79 9.29 BB 0.17 0.21

This makes me wonder if there's something I'm overlooking in how I've implemented my optimiser. What I'm wondering is whether anyone else has done a similar experiment with similar results? In particular, I would like to know if anyone has tried to match Alexandru Mosoi's dataset with just material and arrived at "correct" piece values that represent the data.

An example, perhaps there are others.

[d]8/3k4/4r3/2Rp4/1P2bR1P/5p1K/8/8 w - - c9 "1-0";

-

hgm

- Posts: 27789

- Joined: Fri Mar 10, 2006 10:06 am

- Location: Amsterdam

- Full name: H G Muller

Re: Ab-initio evaluation tuning

Actually he might have gotten it from me: I remember a thread here where I proposed a Bishop-dependent Pawn value, to be handled through the Pawn hash. Upon which Tord remarked that we needed a Pawn-dependent Bishop value, and that I pointed out this is the same, and just a quadratic cross term between Bishops and Pawns.Evert wrote:I think Tord's inspiration came from the bishop pair bonus and the various imbalance/scaling rules in the paper by Larry Kaufman (knight and rook "redundancy" and scaling with remaining pawns).

http://talkchess.com/forum/viewtopic.php?p=136647

Tord Romstad wrote:Clever! That would probably work. It seems very obvious in hindsight, but I didn't think of it.hgm wrote:Yes, but making the value of a Bishop dependent on the number of Pawns is equivalent to making the base value of a Pawn dependent on the number of Bishops.

Tord

-

Evert

- Posts: 2929

- Joined: Sat Jan 22, 2011 12:42 am

- Location: NL

Re: Ab-initio evaluation tuning

Ok, with 6 men (and fewer) positions and balanced positions removed, I get

Compared to the previous results, the MG value for the pawn is smaller (not sure I understand that). Comparing the two different sets, I actually get consistent results for the value of the Rook and the EG value of the minor pieces. The value of the Queen still comes out a bit lower for the AB set than the AM set, but the difference is smaller. What seems to be a persistent feature though is that the EG value of the minors comes out as smaller than the MG value, which I still don't think can be correct when it comes to playing games. The difference is not as dramatic as it was though.

Code: Select all

AM AB

MG EG MG EG

P 0.81 1.00 0.70 1.00

N 3.29 2.72 3.02 2.73

B 3.20 2.84 3.07 2.85

R 4.15 5.09 4.15 4.95

Q 9.00 9.62 8.82 9.35

BB 0.11 0.21 0.09 0.25

-

Evert

- Posts: 2929

- Joined: Sat Jan 22, 2011 12:42 am

- Location: NL

Re: Ab-initio evaluation tuning

Ok, that makes sense. Then the cure is indeed to add more evaluation terms and improve the evaluation that way.Ferdy wrote: One reason could be in the training set coupled with the available tuned eval features, here is one scenario - winning side has no minor and losing side has minor (or losing side minor > winning side minor), now reducing the minor value would increase the winning side score (the goal). This is aggravated because other eval features of losing side are not available to counter the material score of the winning side.

An example, perhaps there are others.

[d]8/3k4/4r3/2Rp4/1P2bR1P/5p1K/8/8 w - - c9 "1-0";

Something else that bothers me: we're trying to fit the continuous outcome of a logistic function to the ordinal outcome of the game. I suspect it might be better to assign each position a value in the range 0..1 based on a number of games played from that position, with the value determined by the average score (the fraction of points won by White). The computation cost to do that is huge though...